I like Herb, you don't get to be chair of WG21 for over a decade without being a sufficiently nice guy - and he is. I'd count him a friend if chairs were really allowed to have friends/preferences. His perspectives on C++ demand/growth/iteration are worthwhile to read, here. The doomsaying about C++ often ignores the very real gap that it and Rust fill, which no other languages sufficiently meet - high performance, low latency, minimal overhead.

Having said that the appendix to the article contains some opinions I find disquieting. The techno-positivism about AI is misplaced - there are no upsides, outside of programmer and medical work. It is destroying academia by allowing students to avoid writing and thinking for themselves, destroying communication by allowing people to avoid displaying themselves in honest and authentic ways, destroying music (~50000 AI-generated songs are presently being uploaded to Spotify per-day) by whitewashing the landscape with mediocre slop, and has already begun destroying the careers of visual artists by making them inconvenient. It is, of course, also destroying the internet and making electricity more expensive for everybody.

Anyone who argues otherwise either has skin in the game on the wrong side, or has no skin in the game at all. It reminds me in some ways of the misplaced passivity around piracy in the 00's, where people would try and convince me that copying 12 albums onto a USB stick within 1 minute was the same as spending an hour copying 1 album onto a cassette tape in the 80's. It wasn't of course, and it destroyed what was good about the music industry. I'm a bit tired of holding my tongue or being laissez-faire about it. Either you oppose it, or you're on the wrong side of the fence.

Recommended:

Overall I agree with the video. The problems it portrays, while hyperbolised, are real and accurately described - for the most part. The language is iterated upon by C++ language experts, who are not always external domain experts or good computer scientists. Don't get me wrong - there are some very smart people on the committee and people who're involved heavily in their given domains, but C++ has become so complex now that it requires extensive domain knowledge *as a field itself*. So you actually need those C++ experts to navigate it. Hence there can be a somewhat incestuous element at play. What the video does right is exposing to C++ users the abrupt and stark comparison between it and other languages, rather than looking at C++ in a vacuum. It assumes the viewer is not someone with a lot of experience with languages outside than C++, and is possibly correct in terms of the majority of people who will be viewing the video; and I think it does the world a service, in that way.

But here's some of the things the video gets wrong:

With all that being said, I acknowledge that the C++ infrastructure is horrible; his main point. I get around that personally by:

But I use this language for a reason. Things I like about C++:

Subjective appreciations and depreciations aside, my expectation is that over time many safety-critical infrastructural things will be replaced with Rust code or similar. However anything where performance is paramount and safety secondary, will stick with C++, or something like it. This may include gamedev, financial stuff, and physics simulation, etcetera. I was personally surprised the video didn't mention Jai, but to be honest that language might as well be dead (given that it's been in closed development for ~10 years with no public beta).

But also... worst?? Has he seen Perl? (or Brainf***?) There are worse, for sure.

Managed to improve the algorithm for reserve so that, instead of allocating too many elements when the remainder of requested_capacity % block_capacity.max is smaller than block_capacity.min. In most cases the resultant capacity should be much closer to the requested amount, if not exactly the same. For example, before if you had an empty colony/hive with min/max block capacities of 50/70, and you called reserve(150), it would allocate 190 (2 x max + 1 min). Now it allocates 150 (3 x 50). The result is actually microscopically faster due to the lower amount of allocation.

In addition some exceptional scenarios - pointed out by bloomberg - have been addressed in reserve(). Specifically when calling reserve(n) when n <= max_size() would bring capacity over max_size() due to the prior capacity and block capacity limits. And I found a weird bug in range fill insert/construction that I'm not even sure how it got there :/

next_one(index), prev_one(index), next_zero(index) and prev_zero(index) have been supplied for bitset/bitsetb/bitsetc - each adds an optimized way to return the next/prev zero/one in the bitset, before/after the supplied index number. The same speedups expected from first_zero/etc are also in these functions. The plf::bitset ones are noexcept and all are constexpr-capable.

I wrote a paper which goes into this in depth. For a particular application I was thinking about the fastest way to calculate the first 1 in any series of bits. The linear response is of course, scan from the front of the row of bits, and find the first one. The secondary way is to tackle it at the word level - that is, for a 64-bit platform, scan the words containing the bits until a non-zero word is found, then go into that word and use either std::countr_zero or manual counting to find the first 1 within that word quickly. This is how plf::bitset does it.

This is all well and good, until you start to run into very large bitsets. At that point, even the word-level scan takes a long time. But what if you use another, secondary bitset, on top of that bitset? So, taking that first set of words, notate in a secondary bitset the words which are non-zero. That is, for every 64 bits (assuming a bitset using 64-bit integers), you get 1 bit in the secondary bitset. 0 represents a zero word in the first layer, 1 represents a non-zero word. Now you can scan this 2nd bitset layer on a word-level - with every word representing 4096 of the first bitset's bits - until you find a non-zero word on that layer, and then use std::countr_zero to find the first non-zero word in the first bitset, then use std::countr_zero on that word to find the first bit.

Now the fun thing about this is that you can scale it up as much as you want. For the 2nd bitset, you're effectively scanning 4096 1st-layer bits per word - for a 3rd layer of bitset, you're scanning 262144 1st-layer bits per word - for a 4th layer 16777216 bits, and so on. What this means is that for a 1073741824 length bitset, you could, with a 4-layer system, find the first non-zero bit in the bitset within 64 comparisons plus 4 calls to std::countr_zero (usually maps to a CPU intrinsic).

Or you can tweak it and say that at the 2nd layer each bit represents 4 words - so you're scanning 16384 bits per 2nd-layer word. There's a load of room for manipulation. The (not so) tricky part is updating the layers - basically if going from zero to non-zero or vice-versa at the word level on any given layer, this has to be updated in the layer above - and then up through the rest of the layers as necessary. You can also set these up to search for zeroes instead, or both zeroes and ones - but more on that in the paper above.

And it goes without saying that every additional layer is 64 times smaller than the 1st. So for the example above with 1073741824 bits in the first layer, or 16777216 words, you're looking at an additional 262144 words for the 2nd layer, 4096 for the 3rd layer and 64 for the 4th layer. So there's an inverse relationship between the amount of memory you spend and the amount of optimisation gained for each layer, which is strange but makes sense.

Well, I suppose I should note that hive ie. colony but with a buzz, made it through plenary. So it's in C++26, basically. This is good. It's weird how little I feel about it. Not in a bad way, but just in a 'huh' way. Okay so that happened. I guess the fact is that by the end of it, the paper was so much more a team effort. There's still a little bit of work to do. Not on the paper, just the implementation. I'd like to thank everybody I thanked in the paper, but, like, again. It's done.

I needed a bitset for something so I wrote one - well, two actually. And then later, three. The second was more a matter of realising I could improve on the std:: library implementations a bit. So, introducing plf::bitset (the second one), and plf::bitsetb, being the one I developed initially. The latter takes a user-supplied buffer and size as constructor arguments, meaning, you can treat any block of memory as a bitset. Both bitsets take the storage type as a template argument, so in most cases you can use your external buffer without reinterpret_cast'ing the pointer to it (so long as it's a trivial POD type). The template argument also allows you to reduce the storage size of your bitset to the smallest possible type ie. a byte, meaning you won't get as much wasted memory if your bitset size doesn't happen to align exactly with your systems word type (like you would with std::bitset). plf::bitsetc came late in development and is basically a C++20-only custom version of bitsetb which allocates it's own buffer on the heap instead of taking a user-supplied on. How this can be useful vs plf::bitset is explained on the project page.

The bitsets also introduce new features: set_range and reset_range, which set a range of indexes to 1 or 0 respectively, improving upon doing the same thing individually using set(pos)/reset(pos) by around 34652%/67612% (GCC/MSVC, O2 AVX2) on average. There are also optimized functions for finding the index of the first or last 1 or 0 in the bitsets, and a non-allocating swap() function (using the XOR method). Lastly, I provided additional to_string functions to obtain the string in the correct, index-oriented way - not backwards.

plf::bitset hosts it's own stack-allocated buffer, like std::bitset, and is more a drop-in replacement for the latter. More details on all three bitsets can be found on the page. All are under a new license: the Computing For Good license. This is more-or-less an ethically-oriented version of the zLib license, so it's still fairly liberal, moreso than GPL but less-so than zLib. As I explain on the license page, all software licenses are ethical, this one just happens to go outside the typically restraint of only focusing on intellectual property rights.

In an ideal world, licenses such as these would not be necessary. But when major tech corps are polluting, stealing user data and violating copyrights left-and-center, it's pretty obvious that the tech industry is not suitable for establishing moral compasses. Seeing the turn of events in recent years has left me wondering why I got so hung up on the positives of tech back in my 2016 speech. If I'd known that the 3 wealthiest tech people in america would be richer than the poorer 50% of the country combined, and that they did nothing of value with their money, I would've stopped right there. Colony would never have been born. But here we are, and it's up to the smaller people, to say no, where and when applicable.

I realised a while ago that info on how to do benchmarks properly is relatively scarce. Here's some brief tips, geared toward small-scale CPU-based benchmarking (as opposed to heavy-duty apps or graphics-oriented apps like games, where the OS background processes play less of a role in results). Most of them are generic, some are specific to C++ and the various compilers:

rand() % power_of_2 to rand() & (power_of_2 - 1) if this is faster.Thee was a misunderstanding about how typedefs affect constness expression in colony/hive/etc. Basically you'd think that:

typedef group * group_pointer_type;

const group_pointer_type current_group;

Would equate to const group * current_group;, but it doesn't. Basically it's not a macro expansion, it's treated as a type separate to group *. Hence it actually becomes group * const current_group;, effectively; ie. a constant pointer to a non-constant group. Anyway, rather than mixing the east/west constness in the files I decided to just go west const. Not a big deal, but I do get rather tired of finding this sort of funky edge-case in this language. Would that it were simpler.

Revisiting python after, let's say, a 26-year absence, it's fascinating to see it in terms of what I've learned about languages from C++ and about performance in general. The fact that everything is an object yields great results on the user front, incredible usability, but terrible results on the performance front for the obvious reason that processors like single functions run on multiple bits of data at once, in batch, not individual functions running adhoc on individual bits of data. OO leans toward the latter. The other thing I think about extends from what I detest about 'auto' - the fact that you're shifting cognitive load onto the code viewer to infer the object type, all for the benefit of saving a few measly keystrokes, and reducing code readability in the process; python does the same thing, but, for everything.

This has broad implications. While python code looks easy to read (and I approve of whitespace-based formatting), so much is hidden that if one wants to infer performance-related information, you have to basically convert everything in your head to see what is being done implicitly - whereas in C++ it is, by and large, written explicitly. It definitely has it's place, and its a fascinating language as a thought experiment along the lines of 'what if we take object orientation to it's logical conclusion?'. And an excellent replacement for all the unstructured, crappy languages that came before it eg. Perl. But it will never be the language of choice for performance code.

The R26 revision of the std::hive proposal (ie. colony but C++20-only and with some differences) is being polled in LEWG for forwarding onto LWG. This revision is the result of a months gruelling part-time refactoring, basically reducing the non-technical-specification sections down to bare essentials, streamlining delivery, removing repetition and any mistakes found. As a consequence it is some 10kb (4%) lighter than before, despite containing additional data for implementors. This is, I believe, one of the few times a C++ paper has gotten significantly Lighter in a subsequent revision - down to a 'mere' 28351 words. I am more proud of this than I should be. If you had asked me back in 2016 whether I would've been willing to write a thesis-length document in order to promote this thing into the standard library, I would've laughed. But there it is.

The talk basically covers all the stuff I do/have done which isn't colony/hive - including a refresher on plf::list. There's also some rambling about time complexity and it's deep irrelevance to most situations nowadays. I do understand the idea behind it - making sure that you can't have odd situations where, at worst, power of 2 complexity can arise but still - it's by-and-large irrelevant now.

I've been reading this book, which is a surprisingly good read for a programming book - clear, concise, with a sense of humour and mature sense of perspective on life (it is unsurprising how often the latter two contribute to each other). It articulates things I already knew, but also gives helpful advice on situations I am less familiar with. There's one thing it missed however, which is 'don't name stuff the same as other stuff'.

I had a situation in code today where within a given function, two variables, one a const clone of the other for backup purposes, since the original value was going to be overwritten, differentiated only by a single character. Specifically, a '.' for the original member variable, and a '_' for it's clone. This was bad! Later on in the code - in fact, the final line - I'd accidentally run a function on the original which was meant to be run on the clone. This would've had potentially bad consequences in the off-chance that the edge case was reached. Many heads may've been scratched systemically and simultaneously.

I am writing this on an HP laptop keyboard, and they are terrible, so if I've misplaced a character here, blame Hewlett-Packard, but in the above case I had no-one but myself to blame. To fix this, I fixed the line, but that was the superficial part of the fix, really. The real fix was to realise that this sort of similarity-naming, while appropriate, can be confusing, and to stop doing it. Hopefully I haven't repeated this pattern too much. Don't Name Stuff The Same!

A user suggested allowing predicate-based versions of unordered_find_single, unordered_find_multiple and unordered_find_all, to match those found in the standard library - this's been implemented. The original functions work the same, but now you can supply a predicate function instead of a value.

Further developments within reorderase: introspection into the nature of erase_if (which is, really, just a partition with an inverted predicate followed by a range erasure) led me to realise that the algorithm I'd developed for reorderase_scan_if (the order-unstable equivalent of erase_if) could be used for partitioning in general. To be clear, I'm talking for bidirectional iterators and upwards, not forward iterators - the forward iterator algorithm I have is the same as those across the major vendors. Further thoughts:

All std::partition methods for non-forward-iterators are the libstdc++ code, just slightly obsfucated. Mine is slightly different. libc++ takes libstdc++'s code and rearranges the ops (and arguably makes it more elegant) while MS's version takes the libc++ version and makes it less elegant, and kinda funny (for (;;) instead of while(true). Well, I laughed). Since mine derived from working on an unstable erase_if, before I worked out the inverted connection with std::partition, I came at it fresh. The only real difference is that one of the loops is nested, which actually removes one loop in total from the equation.

The performance results are as follows: all over the place. That's not due to lack of benchmarking proficiency, though I did have to do some work there to eliminate cache effects. I believe (but do not know for sure) that it's because branch prediction failure recovery cost (say that nine times fast) and loop efficiency differ significantly from architecture to architecture. In addition, type sizeof plays a huge role in the gains measured. The larger the size of the copy/move, the less relevant the effects of the differing code are, and the largest differences are seen with smallest sizeof's.

Generally-speaking, what I can say is that on computers older than 2014, my algorithm performs better - and the older the processor, the better it performs (for example: 17% faster on average for core2's). However, on newer machines, the algorithm which all the major vendors use performs better - generally (there are some outliers - a 2019 Intel mobile processor works better with my algo, for example). All I can say for certain is that you should check on your own architecture to find out.

Now, destructive_partition. This was a suggestion from someone in SG14, though I'm not sure that it has a major use. Basically it does the same thing as my 'order-unstable erase_if' (reorderase_scan_if) in the sense of moving elements rather than swapping them around, hence you end up with a bunch of moved-from elements at the end of the range. What is this good for? Unsure. But inspired by the idea, I came up with sub-range based versions of unordered std::erase_if/std/::erase, which clears a range within the container of elements matching a given predicate, and moves elements from the back of the container to replace them - again, making it the operation's time complexity linear in the number of elements erased.

As to whether erasing from sub-ranges of containers is a common practice, I cannot say - but the possibility is there, now. The functionality comes in the form of overloads to reorderase_all/reorderase_all_if, with the additional first/last arguments. In addition the following boons have been granted to the regular full-container overloads of reorderase_all/reorderase_all_if - they're faster and supports forward-iterator containers like std::forward_list now.

As things so often happen, I chanced upon a concept in a talk by someone else that changed my conceptual boundary for what indiesort could do - not in a big way, mind you, but in a useful way at least. That talk was Bjarne's from earlier this year, and it detailed a very basic idea for sorting a std::list faster: copy the data out into an array, sort the array, copy it back. "Huh", said I. That makes a lot more sense than what I'm doing, at least when the type sizeof is very small - specifically, smaller than 2 pointers. That means it's smaller than the pointer-index-struct which indiesort was using to sort the actual elements indirectly. But copying the elements out and copying them back is also an indirect form of sorting, isn't it?

On realising this I incorporated it into indiesort, for types smaller than the size of 2 pointers. There was no performance improvement, just a memory usage reduction for the temporary array constructed in order to sort the elements. In addition, I was able to apply the same ability to plf::colony (which uses indiesort internally for it's sort() function). I contemplated adding it to plf::list, which uses the precursor to indiesort (it changes previous/next list node pointer values rather than moving elements around), but this would make plf::list less-compatible with std::list. By doing Bjarne's technique, you end up moving elements around instead of changing next/previous pointer values - meaning that pointers/iterators to existing elements would now point to different elements after a sort is performed.

I did try incorporating the behaviour into plf::list, and there was a performance increase of up to 70% (average increase of 15%) across small scalar types (depending on the type and the number of elements being sorted) with the behaviour present. But, of course, the original behaviour is faster/more-memory-efficient with larger elements, and what good is an algorithm which has one guarantee (element memory locations stay stable after sort()) for one type and not for another? Might ask the community. But I overall prefer the idea of being as-much of a drop-in replacement for std::list as possible (lack of inter-list range-splice notwithstanding). [EDIT/UPDATE: I've decided to keep plf::list's sort technique the same, to maximise std::list compatibility, however if someone wants this performance increase ie. up to 70% faster sorting for small scalar types, they can get it by using plf::indiesort with plf::list.]

colony is updated - specifically, I removed one strictly-unnecessary pointer from the block metadata which made some things easier. It took a looooong time to find a way to get everything as performant as it was with the pointer, but now that it is, I'm pretty happy with it. Performance is slightly better for scalar types, and I should have some new benchmarks up at some point. The downside of this breaking change is that advance/next/prev no longer bound to end/cend/rbegin/crbegin, which means you have to bound manually using a </> comparison with your iterator and setting it to end/cend/rbegin/crbegin if it's gone beyond that. In addition plf::colony_limits has been renamed back to just plf::limits. In future I might end up using the same struct across multiple containers, hence the change. Lastly there was a bug in is_active in that if you supplied an iterator pointing to an element in a block which had been deallocated, but then the allocator had used the same memory location for providing a new block, it could cause some issues. That's fixed.

queue and stack are updated - they have iterators/reverse_iterators now, including all the const varieties. Why iterators? Debugging purposes, mainly. It's something people were asking for as far back as 2016, and it didn't take too much effort.

reorderase has new optimisations for range-erase (reorderase(first, last)) and deques. Basically if the range begins at begin(), reorderase will just erase from the front (basically a pop-front for a deque).

indiesort now chooses the appropriate algorithm based on whether the iterators are contiguous or not, at least under C++20 and above. In addition, for non-random-access iterators/containers, if the type size is small and trivally-copyable, it will switch to simply copying the elements to a flat array and sorting that, then copying the elements back. This idea was inspired by this talk by Bjarne Stroustrop.

The rest are much the same.

See the project page for the details, but basically reorderase takes the common swap-and-pop idiom for random-access-container erasure, optimises it, then extends it to range-erasure and erase_if-style erasure. It performs better on larger types and larger numbers of elements, where the O(n) effects of typical vector/deque/etc erasure have strong consequences. The results, on average across multiple types, numbers of elements from 10 to 920000, are:

As a side-note, thinking about making a paper for this. Though my experience with the C++ standards committee so far has not been exactly rewarding, I believe that more people need to know about and understand these processes.

An additional section in the Low-complexity Jump-counting skipfield paper now details how to create a skipfield which can express jumps of up to std::numeric_limits<uint64_t>::max() using only an 8-bit skipfield. There is a minor cost to performance, and introduction of a branch into the iteration algorithm. See "A method of reducing skipfield memory use" under "Additional areas of application and manipulation".

While I am off twitter generally nowadays, occasionally I dive back in to see what the latest bollocks is, and spotted John Carmack's ridiculous post supporting absolutist free speech. While I support Carmack's views as far as programming goes, I find him naive sociologically - and perhaps his perspective is the result of being a thoroughly empowered individual in his society (which he has absolutely earned through his talent). There seems to be ongoing discussion - primarily in America and primarily on social media - debating the worth of free speech. As a NZ'er I always find this a bit awkward, as Americans are, by and large, unaware of how much of a non-event this is internationally.

That is to say most countries with some small exceptions, do not glamourise freedom nor hold freedom of speech as an ideological goal. NZ for example was predominantly founded on ideals of fairness, not freedom - there have been books written about this and the divergence with American ideals. As far as the results of this go, New Zealand always rates near the top of the charts in terms of international standards of individual freedom. America languishes far behind. Why is this?

Well, it turns out that when you drive toward freedom as your central goal, you play down the aspects of fairness which empower individuals in a broader egalitarian way, and entrench power in the hands of those who already hold it. In other words, a society which values freedom above fairness will always result in more freedom for those with the most power. A well-respected, wealthy, well-known individual can always use their clout, power and money to shut down discourse against their activities, without fair checks and balances in place (see: Twitter).

So I am not a massive advocate of free speech. I see the benefit, but much like an emphasis on freedom in general, it tends to result in more freedom for the powerful and less for the less powerful once taken as an ideology. This is not acceptable. Neither am I an advocate of overly kind speech, which once taken as an ideology, tends to result in untruths being spoken to ameliorate the timid or catatonically-reactive. Which is neither kind nor useful, in terms of longer-term outcomes. People need their boundaries and preconceptions pushed, sometimes. What I am in favour of is fair speech, which is, as an ideology, an attempt however flawed to match appropriate action to the given situation, as it occurs.

An example: someone insults you. Free speech response: you can say whatever you want in return. There are no boundaries, which means you can escalate the situation, if you choose to - it all comes down to the individual - that's your right. Free speech advocates sometimes attempt to legitimize the negative outcomes of free speech by declaring "freedom of speech doesn't mean freedom from consequences". This is such an idiotic argument. Typically the negative effects of free speech are on those being spoken at, not the speaker. The higher the power level of the speaker, the less likely negative effects on them are, which means those in power are typically emboldened to be as obtuse and unfair as possible (see: a certain ex-president).

Kind speech response: you are kind and do not react, but neither do you push back against the aggressor. This tends to evolve into passive-aggressive situations, and typically an outcome of gradually socially 'pushing-out' troublesome individuals. Which may work out sometimes, and sometimes it may not. But often it results in the kind of 'cancel culture' over-reactivity which has become so common and toxic to intelligent discourse over the past 2 decades. The end result of which is the same as the result for free speech, only with the power dynamic inverted - those perceived as 'less powerful' are emboldened to be obtuse and unfair.

Fair speech response: balancing the situation against the needs of the aggressor (they may have a legitimate reason to be angry, regardless of how poorly they're handling it), versus your needs (you have limits too - and you might perform worse if they push further), versus what is best for society (if you don't push back, is this person going to do the same thing to someone or something else? Or would pushing back reinforce their negative habit?), what you might do could be 'aggressive', 'assertive', or it could be 'timid' or neutral. The point is not to reach for an set response, but for an appropriate response. A truly kind response can be a hug (metaphorically), or it can be a slap (again, metaphorically). Some people need boundaries, because they can't create them for themselves, or haven't had healthy boundaries demonstrated to them. We don't know, so we guess, make judgements and do our best to rise to the situation appropriately.

Discourse then becomes not about who has the most or least power in the dynamic, but about what is fair between the given individuals or groups. Reaching for an ideal of kindness or freedom-based individualism is not terribly practical, and in a sense is a partial foregoing of one's social responsibility. Those who don't understand the potential value of harsh responses in a social context, I refer to this talk by Jonathan Haidt. Fair speech contains an implication of fairness in terms of ones balancing of individual responsibility with social responsibility. Everybody has their own concept of what is fair and unfair, so it's certainly a grey area with no consensus there, but it's a better North Star to guide towards than kindness or freedom. IMHO.

Colony now includes static constexpr size_type max_elements_per_allocation(size_type allocation_amount) noexcept, which is basically the sister function to block_allocation_amount. Given a fixed allocation amount, it returns the maximum number of elements which can be allocated in a block from that amount. Since colony allocates elements and skipfields together, this isn't something one can work out merely from the sizeof(element_type). This could be useful with allocators which only supply fixed amounts of memory, but bear in mind that it is constrained by the block_capacity_hard_limits(). Which means an allocator which supplies a very large amount of code per allocation, would still only be able to create a block of 65535 elements max (or 255 for very small types), and max_elements_per_allocation will truncate to this.

Philipp Gehring pointed out to me that currently colony, hive et al won't compile if gcc/clang is used and -fno-exceptions specified. I've fixed this, and as a bonus the fix also works with MSVC, which means that if no exceptions are specified in MSVC/clang/gcc (a) then try/catch blocks won't be generated and (b) throw statements change to std::terminate statements. So you get slightly more useful behaviour under MSVC, and the ability to compile in gcc/clang. This change affects hive, colony, list, queue and stack. In addition a couple of unrelated bugs were quashed for colony.

Twitter was always completely sh*thouse. There is a particular sickness of the modern century which seems to result in adults acting like someone's punched their mum in response to relatively banal first-world problems, and the house in which that disease was spread was primarily social media, but really, mostly Twitter. So no big loss? But yeah, no Elon Musk for me.

I looked up at the night sky a few months ago, from a location I won't name, but which has fairly astronomical views in a literal sense, and in the early hours saw a trail of lights litter across the sky in single file. When I was a teenager I saw a daisy plant in a field which had been affected by spray drift from a hormonal pesticide - actually I felt it before I saw it, a massive knowing of 'wrong' eminating from a particular point in the field, only when I got closer I noticed the plant was twisted and warped, with roughly 50 daisy stems growing together, culminating in an ugly half-circle head of those daisy flowers growing as one horrific abomination. Elon Musk's 49 satellites were like that. A great sense of wrong.

There's something callous, even cruel about the idea of using the night sky, something which belongs to no-one, as a fairly blatant way to advertise your product. There's something ego-maniacal about it. I've come across worse people, but at least in terms of services and programs, I won't be using anything by someone who perverts the night sky like that.

And that was *before* all the subsequent bullcrap. I mean, come on. Using your position of power to call out your preference for governance, and in such a low-brow and hypocritical way. Reinstating a clown-in-chief account which was used to pervert due process. And just generally being a dork. So yeah. I had the option to sign up to starlink at my new location, but it got taken away from me and I was glad it did. Nothing's worth that.

I'll always admire pre-drugs Musk - Tesla has done some great work in the world, even Paypal for all it's early illegality was eventually a force for good, or at least less-evil than the alternatives.

This brings colony up to date with the changes in plf::hive over the past year. Overall summary: fewer bugs, better performance, more functionality. It brings support for rangesv3 under C++20, as well as stateful allocators and a few other things. Sentinel support for insert/assign is gone, as this is apparently done via ranges nowadays, and a few new functions are in there:

bool is_active(const const_iterator it) const

This is similar to get_iterator(pointer), but for iterators. For a given iterator, returns whether or not the location the iterator points to is still valid ie. that it points to an active non-erased element in a memory block which is still allocated and belonging to that colony instance. It does not differentiate between memory blocks which have been re-used, or whether the element is the same as when the value was assigned to the iterator - the only thing it reports, is whether the memory block pointed to by the iterator exists in an active state in this particular colony, and if there is an element being pointed to by the iterator, in that memory block, which is also in an active, non-erased state.

static constexpr size_type block_metadata_memory(const size_type block_capacity)

Given a block element capacity, tells you, for this particular colony type, what the resultant amount of metadata (including skipfield) allocated for that block will be, in bytes.

static constexpr size_type block_allocation_amount(const size_type block_capacity)

Given a block element capacity, tells you, for this particular colony type, what the resultant allocation for that element+skipfield block will be, in bytes. In terms of this version of colony, the block and skipfield are allocated at the same time (other block metadata is allocated separately). So this function is expected to be primarily useful for developers working in environments where an allocator or system will only allocate a specific capacity of memory per call, eg. some embedded platforms.

As a note on the latter function, I've experimented with allocating elements+skipfield+other-metadata in a single block, but it always ended up slower in benchmarks than when the other metadata was allocated separately. I'm unsure why that is, possibly something to do with the block metadata mostly not being touched during iteration, so keeping the elements+skipfield together but the other metadata separate may reduce the memory gap between any two given blocks and subsequently increase iteration speed - but I have no idea really.

Generally-speaking, I would prefer using hive over colony nowadays, if you and all your libraries have C++20 support - mainly because C++20 allows me to overload std::distance/next/prev/advance to support hive iterators, whereas because colony must function with C++98/03/11/14/17, it only supports ADL overloads of calls to those functions ie. without the std:: namespace. Also hive is significantly smaller due to the lack of C++03/etc support code - 168kb vs 196kb for colony, at the present point in time. hive lacks the data() function, which gives you direct non-chaperone'd access to colony's element blocks and skipfield, various other sundry functions like operator ==/!=/<=> for the container, and also the performance/memory_use template parameter, which allows colony to change some behaviours to target performance over memory usage. Hive is essentially a simplified and somewhat limited version of colony.

Speaking of the performance/memory template parameter for colony, the behaviour there has changed somewhat in the new version. It was found during discussions of hive (and subsequent testing on my part) with Tony Van Eerd that hive/colony actually performs better with an unsigned char skipfield and the resultant smaller maximum block capacities (255 elements max) when the sizeof(element_type) is smaller than 10 bytes. This makes sense when you think about it in terms of cache space. When the ratio of cache wasted by the skipfield, proportionate to the element sizeof, is too large, the effect of the larger skipfield type is, essentially, to reduce the cache locality of the element block reads by taking up more space in the cache.

When you reduce the skipfield type to a lower bit-depth, this also reduces the maximum capacities of the element blocks, due to the way the jump-counting skipfield pattern works. But it turns out the reduction in locality based on the lowered maximum block capacity is not as deleterious as the reduction in cache capacity from having a larger skipfield type. This effect goes away as the ratio of skipfield type sizeof to element sizeof decreases. So for a element type with, say, sizeof 20 bytes, a larger 16-bit skipfield type makes for better *overall* cache locality.

Hence the default behaviour now is to have an unsigned char skipfield type when the sizeof(element_type) is below 10 bytes, regardless of what the user supplies in terms of the performance/memory_use template parameter. However even in this case, the parameter will be referenced when a memory block becomes empty of elements and there is a decision of whether or not to reserve the memory block for later use, or deallocate it. If memory_use is specified, the memory block will always be deallocated in this case, unless it is the last block in the iterative sequence and there are no other blocks which have been reserved previously.

The latter is a guard against bad performance in stack-like usage, where a certain number of elements are being inserted/erased from the back of the colony repeatedly, potentially causing ongoing block allocations/deallocations if there are no reserved memory blocks. If instead performance (the default) is specified as the template parameter, blocks will always be reserved if they are the last or second-to-last block in the iterative sequence. This has been found to have the best performance overall in benchmarking, compared to other patterns I have tested (including reserving all blocks).

In addition if memory_use is specified, the skipfield type will always be unsigned char and the default max block capacity limit 255, regardless of the sizeof (element_type), so this much has not changed. Further, in benchmarking the latter, some non-linear performance characteristics were found during erasure when the number of elements was large, and these have subsequently been fixed.

The second thing to come out of the aforementioned testing was the discovery that a larger maximum block capacity, beyond a certain point, did not result in better performance, the reason for which is again, cache locality. When the element type is larger than scalar, beyond a certain point there is no gain from larger block capacities because of the limits of CPU cache capacity and cache-line capacity. In my testing I found that while the sweet spot for maximum block capacities (at least for 48-byte structs and above) was ~16383 or ~32768 elements in terms of performance, in reality the overall performance difference between that and 8192-element maximum capacities was about 1%. Whereas the increase in memory usage going from max 8192 block capacities to max 32768 block capacities was about 6%.

Below 8192 max, the performance ratio dropped more sharply, so I've stuck with 8192 as the default maximum block capacity limit when the skipfield type is 16-bit - this is still able to be overridden by the user via the constructor arguments or reshape, up to the maximum limit of the skipfield type. In regards to this, there are 4 new static constexpr noexcept member functions which can be called by the user prior to instantiating a colony:

These functions allow the user to figure out (a) what the default minimum/maximum limits are going to be for a given colony instantiation, prior to instantiating it, and (b) what potential custom min/max values they can supply to the instantiation.

Final note: why does a larger maximum block capacity limit not necessarily scale with performance? Well, you have to look at usage patterns. Given that element insertion location is essentially random in colony/hive, whether a given element in a sequence is likely to be erased later on is also independent of order (at least in situations where we do not want to, say, just get rid of half the elements regardless of their values or inter-relationships with other data). So we can consider the erasure pattern to be random most of the time, though not all of the time.

In a randomised erasure pattern, statistically, you are going to end up with a lot of empty spaces (gaps) between non-erased elements before a given memory block is completely empty of elements and can be removed from the iterative sequence. These empty spaces decrease cache locality, so it is in our best interest to have as few of them as possible. Given that smaller blocks will become completely empty faster than larger blocks, their statistical probability of being removed from the iterative sequence and increasing the overall locality, is increased. This has to be balanced against the lowered base locality from having a smaller block.

So there is always a sweet spot, but it's not always the maximum potential block capacity - unless the ratio of erasures to iteration is very low. For larger element types, memory fragmentation may play a role when the allocations are too large, as may the role of wasted space when, for example, you only have 10 additional elements but have allocated a 65534-element block to hold them in. This is why user-definable block capacity min/max limits are so important - they allow the user to think about (a) their erasure patterns (b) their erasure/insertion-to-iteration ratios and (c) their element sizeof, and rationalize about what the best min/max capacities might be, before benchmarking.

They also allow users to specify block capacities which are exactly, or multiples of, their cache-line capacities, and (via the block_allocation_amount function) match their block capacities to any fixed allocation amounts supplied by a given platform.

Holy carp this is a long blog post. Okay. Now onto the plf::list/plf::queue/plf::stack/etc changes. For plf::list the same changes regards adding ranges support and removing specific sentinel overloads occur. For all containers there are corrections to the way that allocators and rebinds are handled - specifically, stateful allocators are corrected supported now ie. rebinds are reconstructed whenever operator =, copy/move constructors or swap are called. For plf::list and colony it is no longer possible (above C++03) to incorrectly assign the value of a const_iterator to a non-const iterator, and for all containers the compiler feature detection routines are corrected/reduced in size. For even more info, see the version history on each container's page.

A bunch of thoughts on Carbon, in case anyone's interested.

Mostly I support the notion. Culling the dead weight? Great.

Public-by-default classes? As it should be (think about it - when you're prototyping and doing early experimentation, that is not the time to be figuring out what should be publicly visible - privatising members comes later).

Bitdepth-specific types by-default? Excellent!

A different syntax that is maybe, subjectively-better to some people... ehh.

Some aspects of the latter are in my view wrong. All natural languages start with generics and move to specifics (or with the most important thing in that culture first, then to the least), and so do programming languages. We have a heading with the general topic, then a paragraph with the specifics. We have a function name and the arguments, then we do something with those arguments. We would find it very odd to specify the name and arguments at the end of the function. This is not so much about word order as it is about making communicative sense.

With that in mind, consider the following english sentence:

"There was a being, a human named John, who was holding an apple."

We don't say:

"There was a being, whose name was John, a human, who was holding an apple."

because it moves from more specific (John) to less specific (human). Likewise we don't tend to say:

"An apple was being held by John, who was a human, and a being."

But Carbon variable declarations look like this:

var area: f32 = 1;

Break that down: var is the least specific aspect (because there are many, many vars), the type of var (f32) is the second least specific (because there may be many f32's), the name is more specific (because there is only one of this name) and the value is the most specific (because it is a value ascribed to the specific name).

So it should look like:

var f32 area = 1;

Except that 'var' is completely superfluous, in terms of human parsing at least (most vexing parse ignored for the moment).

In the following sentence:

"There was a being, a human named John, who was holding an apple."

"being" is implied by "human". We know that humans are beings, and therefore in most cases we would say:

"There was a human named John, who was holding an apple."

This seems more natural.

Similarly, I think saying:

f32 area = 1;

is perfectly natural, as 'var' is implied by f32, and a function cannot begin with f32, and functions are prefixed by 'fn' anyway. Thus we come back to C++ syntax. There may be a desire to move away from that, based on simply wanting something different, but I don't see a logical or intuitive case for it. Is the most vexing parse (the supposed logical reason for inclusion of 'var') really that big a problem? I'd never even heard of it before reading about Carbon.

Other thoughts: no easily-available pointer incrementation? Then how do you make an efficient vector iterator? Or a hive iterator, for that matter? (Don't say 'indexes' - every time I've benchmarked indexes vs pointers over the past ten years, pointer incrementation wins - you always have to have an array_start+index op for indexes, which is two memory locations, rather than a ++pointer op, which is one). Likewise, if pointers cannot be nulled, what does that mean? You still need a magic value to assign to pointers which no longer point to something - otherwise there's no way of checking whether a pointer is still valid, by a third party. Either I'm missing something in both of these cases, or there is a lack of clear thinking here.

There's also a few other things I would useful to fix. I don't see simplified support for multiple return values from a function. Something as straightforward as:

fn Sign(i: i32, j: i32) -> i32, i32

, where the return statement also supports comma-separated returns:

return 4, 5;

would be a simpler way forward than the current solution of tuple binding/unbinding.

Anyway. I think Carbon has potential, but as to whether it is going to be better than C++, or merely a substitute for people who like a particular way of doing things, with it's own share of downsides, remains to be seen. Jonathan Blow's 'jai' language started with a very clear vision - be a C-like replacement language specifically for games. I get the feeling that at this point, Carbon doesn't realise how specific it's vision is, and what fields it might be excluding. At the moment it's making containers harder to make, for example, by excluding pointer arithmetic. There's certainly time for people to get on board and move the goalposts, but for me personally I find honesty to be a necessity for any community, and the leads in Carbon have made it clear that they don't believe this is worth putting in their Code of Conduct.

This guide was developed to aid in people's thinking about which container they should be using, as most of the guides I'd seen online were rubbish. Note, this guide does not cover:

These are broad strokes and can be treated as such. Specific situations with specific processors and specific access patterns may yield different results. There may be bugs or missing information. The strong insistence on arrays/vectors where-possible is to do with code simplicity, ease of debugging, and performance via cache locality. I am purposefully avoiding any discussion of the virtues/problems of C-style arrays vs std::array or vector here, for reasons of brevity. The relevance of all assumptions are subject to architecture. The benchmarks this guide is based upon are available here, here. Some of the map/set data is based on google's abseil library documentation.

a = yes, b = no

0. Is the number of elements you're dealing with a fixed amount? 0a. If so, is all you're doing either pointing to and/or iterating over elements? 0aa. If so, use an array (either static or dynamically-allocated). 0ab. If not, can you change your data layout or processing strategy so that pointing to and/or iterating over elements would be all you're doing? 0aba. If so, do that and goto 0aa. 0abb. If not, goto 1. 0b. If not, is all you're doing inserting-to/erasing-from the back of the container and pointing to elements and/or iterating? 0ba. If so, do you know the largest possible maximum capacity you will ever have for this container, and is the lowest possible maximum capacity not too far away from that? 0baa. If so, use vector and reserve() the highest possible maximum capacity. Or use boost::static_vector for small amounts which can be initialized on the stack. 0bab. If not, use a vector and reserve() either the lowest possible, or most common, maximum capacity. Or boost::static_vector. 0bb. If not, can you change your data layout or processing strategy so that back insertion/erasure and pointing to elements and/or iterating would be all you're doing? 0bba. If so, do that and goto 0ba. 0bbb. If not, goto 1. 1. Is the use of the container stack-like, queue-like or ring-like? 1a. If stack-like, use plf::stack, if queue-like, use plf::queue (both are faster and configurable in terms of memory block sizes). If ring-like, use ring_span or ring_span lite. 1b. If not, goto 2. 2. Does each element need to be accessible via an identifier ie. key? ie. is the data associative. 2a. If so, is the number of elements small and the type sizeof not large? 2aa. If so, is the value of an element also the key? 2aaa. If so, just make an array or vector of elements, and sequentially-scan to lookup elements. Benchmark vs absl:: sets below. 2aab. If not, make a vector or array of key/element structs, and sequentially-scan to lookup elements based on the key. Benchmark vs absl:: maps below. 2ab. If not, do the elements need to have an order? 2aba. If so, is the value of the element also the key? 2abaa. If so, can multiple keys have the same value? 2abaaa. If so, use absl::btree_multiset. 2abaab. If not, use absl::btree_set. 2abab. If not, can multiple keys have the same value? 2ababa. If so, use absl::btree_multimap. 2ababb. If not, use absl::btree_map. 2abb. If no order needed, is the value of the element also the key? 2abba. If so, can multiple keys have the same value? 2abbaa. If so, use std::unordered_multiset or absl::btree_multiset. 2abbab. If not, is pointer stability to elements necessary? 2abbaba. If so, use absl::node_hash_set. 2abbabb. If not, use absl::flat_hash_set. 2abbb. If not, can multiple keys have the same value? 2abbba. If so, use std::unordered_multimap or absl::btree_multimap. 2abbbb. If not, is on-the-fly insertion and erasure common in your use case, as opposed to mostly lookups? 2abbbba. If so, use robin-map. 2abbbbb. If not, is pointer stability to elements necessary? 2abbbbba. If so, use absl::flat_hash_map<Key, std::unique_ptr<Value>>. Use absl::node_hash_map if pointer stability to keys is also necessary. 2abbbbbb. If not, use absl::flat_hash_map. 2b. If not, goto 3. Note: if iteration over the associative container is frequent rather than rare, try the std:: equivalents to the absl:: containers or tsl::sparse_map. Also take a look at this page of benchmark conclusions for more definitive comparisons across more use-cases and hash map implementations. 3. Are stable pointers/iterators/references to elements which remain valid after non-back insertion/erasure required, and/or is there a need to sort non-movable/copyable elements? 3a. If so, is the order of elements important and/or is there a need to sort non-movable/copyable elements? 3aa. If so, will this container often be accessed and modified by multiple threads simultaneously? 3aaa. If so, use forward_list (for its lowered side-effects when erasing and inserting). 3aab. If not, do you require range-based splicing between two or more containers (as opposed to splicing of entire containers, or splicing elements to different locations within the same container)? 3aaba. If so, use std::list. 3aabb. If not, use plf::list. 3ab. If not, use hive. 3b. If not, goto 4. 4. Is the order of elements important? 4a. If so, are you almost entirely inserting/erasing to/from the back of the container? 4aa. If so, use vector, with reserve() if the maximum capacity is known in advance. 4ab. If not, are you mostly inserting/erasing to/from the front of the container? 4aba. If so, use deque. 4abb. If not, is insertion/erasure to/from the middle of the container frequent when compared to iteration or back erasure/insertion? 4abba. If so, is it mostly erasures rather than insertions, and can the processing of multiple erasures be delayed until a later point in processing, eg. the end of a frame in a video game? 4abbaa. If so, try the vector erase_if pairing approach listed at the bottom of this guide, and benchmark against plf::list to see which one performs best. Use deque with the erase_if pairing if the number of elements is very large. 4abbab. If not, goto 3aa. 4abbb. If not, are elements large or is there a very large number of elements? 4abbba. If so, benchmark vector against plf::list, or if there is a very large number of elements benchmark deque against plf::list. 4abbbb. If not, do you often need to insert/erase to/from the front of the container? 4abbbba. If so, use deque. 4abbbbb. If not, use vector. 4b. If not, goto 5. 5. Is non-back erasure frequent compared to iteration? 5a. If so, is the non-back erasure always at the front of the container? 5aa. If so, use deque. 5ab. If not, is the type large, non-trivially copyable/movable or non-copyable/movable? 5aba. If so, use hive. 5abb. If not, is the number of elements very large? 5abba. If so, use a deque with a swap-and-pop approach (to save memory vs vector - assumes standard deque implementation of fixed block sizes) ie. when erasing, swap the element you wish to erase with the back element, then pop_back(). Benchmark vs hive. 5abbb. If not, use a vector with a swap-and-pop approach and benchmark vs hive. 5b. If not, goto 6. 6. Can non-back erasures be delayed until a later point in processing eg. the end of a video game frame? 6a. If so, is the type large or is the number of elements large? 6aa. If so, use hive. 6ab. If not, is consistent latency more important than lower average latency? 6aba. If so, use hive. 6abb. If not, try the erase_if pairing approach listed below with vector, or with deque if the number of elements is large. Benchmark this approach against hive to see which performs best. 6b. If not, use hive. Vector erase_if pairing approach: Try pairing the type with a boolean, in a vector, then marking this boolean for erasure during processing, and then use erase_if with the boolean to remove multiple elements at once at the designated later point in processing. Alternatively if there is a condition in the element itself which identifies it as needing to be erased, try using this directly with erase_if and skip the boolean pairing. If the maximum is known in advance, use vector with reserve().

I tend to think of the spaceship operator as a classical example of over-thinking - like the XKCD comic where there's too many standards, so they add another standard. Unfortunately the documentation surrounding it is close to opaque in terms of referencing standardese that no clear-minded student would even consider trying to understand. I've read it and I still don't understand it.

Having said that, it is now implemented in all containers. I had also thought about adding a function to colony/hive which gives the user a way to assess the memory usage of the metadata (skipfield + other stuff) for a given block capacity, then I realised one can calculate this by creating a colony with the given block capacity as a minimum capacity limit, then calculating memory() - (sizeof(colony<t>) + (capacity() * sizeof(t))). But as a convenience function I've put static constexpr size_type block_metadata_memory(const size_type block_size) in colony, for the moment.

Where do we go wrong with our thinking about categories? I like to think of the classic example of the lump of wood and the chair. Take a lump of wood and by degrees gradually transform it into a chair. At what point does it become a chair? Aristotelian logic does not have an answer. Fuzzy logic, sort of, does. By degrees, it is a chair - that is to say at a certain point it maps 40% to what most of us would consider a chair, based on what our brains input of what a 'chair' is (based on examples we have been given) and our own personal aggregate experience of chairs. Then later, 55% a chair, and so on and suchforth. The question is wrong: it's not, "when is it a chair?" or worse, "when do we all agree it's a chair?" - it's "to what extent does it map to our concept of a chair?". The reality is, there's just stuff, and stuff happening. There's a particular configuration of atoms, and we map that to the concept 'chair' based on what is valuable to us. If a chair didn't have any utility to us (be that function or beauty (beauty being an attraction to what is functional to us in some manner, biologically-speakin')) we wouldn't bother to name it, most likely.

A chair doesn't exist, our concept of chair utility exists, and we map that onto our environment. The question of 'what is art?' follows the same guidelines, as art has multiple utilities that are not consistent from 'artwork' to 'artwork'.

So now we get onto the programming side of things. Classical polymorphism is an extension of this original misunderstanding - class chair, subclass throne, subclass beanbag etc. At what point is it not 'of class chair'? That's the wrong question. Map to the utility. And since things rarely have less than two modes of utility, this is where data-oriented design steps in. I'm thinking specifically of ECS design in this case. What properties does this object have? Yes it's a chair, but is it breakable? Is it flammable? What are the modes of utility we are mapping for in any given project? After that point, apply whatever label you want. Whereas polymorphism over-reaches and falsely assigns the importance of the label itself as being comparable to the utility of the object.

It turns out this is a far more useful way of thinking about things, albiet one that requires more care and attention to specifics. One that matches our biology, not the aristotelian abstraction that's been ladled on top of that for millenia. For starters it's much easier to extract the utility, and secondly it's a more efficient way of -thinking- about the objects. We are just a collection of atoms. Programs are just a collection of bytes. Map to that.

To be clear, I'm still running with hive for the standards committee proposal, however I realised that the proposal is significantly divergent from the colony implementation, so I've setup hive as it's own container, separate from colony. The differences are as follows:

The result is a container which (currently as of time of writing) only runs under GCC10, until MSVC and clang get their act together regards C++20 support, and which is 24kb lighter. Hive won't be listed on the website here as it doesn't fit the PLF scope ("portable library framework", so C++98/03/11/14/17/20 and compatible with lots of compilers), however it'll be linked to on the colony page.

The other advantage of keeping colony separate from hive is that colony is insulated from any future changes necessitated to hive, meaning it becomes a more stable platform. Obviously any good ideas can be ported in between hive and colony.

There was a casual minor mistake in the Random access of elements in data containers which use multiple memory blocks of increasing capacity paper (also know as 'POT blocks bit tricks', now updated). The 5th line of the equation should've read "k := (b != 0) << (b + n)" but instead read "k := (j != 0) << (b + n)" which neither matched the earlier description of the equation in the paper, nor my corresponding C++ code. This resulted in the technique not working in the first block, which it does now if you follow it through.

While it might've been noticed by some of the more observant, colony is now optionally typedef'd as hive, as this is the name the committee feels more comfortable with going forward. In terms of my implementation the default name will always be colony, as that's the name I am most comfortable with. There is a paper which explains the name change here. The typedef is only available under C++11 and above due to the limitations of template typedefs in C++98/03. plf::colony_priority and plf::colony_limits are also typedef'd as plf::hive_priority and plf::hive_limits. I'm not... 100% happy about the change, but c'est la vie.

In order to reduce the memory footprint of plf::queue compared to std::queue, there are now two modes that can be selected as a template parameter. 'Priority' is now the second template parameter, before Allocator, as I figure people will likely use Priority more. But at any rate, the new default mode, priority == plf::memory, tends to break the block sizes down into subsets of size(), whereas the original mode, priority == plf::speed, tends to allocate blocks of capacity == size(). The performance loss is relatively low for plf::memory and is still faster than std::deque in a queue context, but the memory savings are massive. More information can be found in the updated 'real-world' benchmarks.

Let's explore the idea of basing design of a product on benchmarks. There are a number of flaws with this: for starters, you can't do an initial design based on benchmarks, you have to build something to benchmark first. So you need an initial concept based on logic and reasoning about your hardware, before you can begin benchmarking. But, in order to get that initial logic, you have to know basic performance characteristics and how they are achieved on a code level - and the only way to know that is via benchmarks either yourself or someone else has done on other products in the past (or having an in-depth mechanical knowledge of how things are working on the hardware level). Otherwise you're just theorising about the hardware, which has limitations.

The second flaw is that, of course, benchmark results will change based on your platform (and compiler) - a platform with a cacheline width of, say, 4k, will benchmark differently from one with a width of 64k. But unless you actually benchmark your code on at least One platform, you don't really know what you're dealing with. And most platforms are reasonably similar nowadays in the sense of all having heavily-pipelined, branch-predicted, cache-locality-driven performance.

So I'm exploring the idea here of benchmark-based design, which is, beyond the initial drawing board part of design, at a certain point you flip over into basing design changes based on what your benchmarks tell you. Nothing revelatory here, something which most companies do, if they're any good. But you want to put the horse before the cart - this involves trying things you think might not work, as well as things you think might. You might be surprised how often your assumptions are challenged. The important part of this is discovering things that work better, then working backwards to figure out how and why they do that.

I've found this kind of workflow doesn't sync well with the use of git/subversion/mercurial/etc, as often I'm benchmarking up to 5 different alternative approaches at once across multiple types of benchmark (if not several different desktops) to see which ones work best. That's how I get performance. But tracking that number of alternative branches with a git-like pipeline just overcomplicates things to no end. So my use of github etc is purely functional - it provides a common and accepted output for those wanting the stable builds.

To expand upon this, plf::queue's original benchmarks were based on the same kind of raw performance benchmarks I used with plf::stack - however this was, somewhat, inappropriate, given that the way a queue is used is very different from the way most containers are used. So I put together a benchmark based on more 'real world' queue usage - that is to say, having an equal number of pushes and pops most of the time, then increasing or decreasing the overall number of elements at other points in time - and tracking the overall performance over time. The intro stats on the queue homepage have been updated to reflect this.

This obviously yielded somewhat different results to the 'raw' stack-like benchmarks, and while the results were still good, I felt I could do better. After reasoning about what the blocks in the stack were doing for a while, I tried a number of different approaches, including some I thought logically couldn't improve performance, and found that some of the more illogical approaches worked better. But I also tracked memory usage and found that the more 'illogical' techniques were using lower amounts of memory, and were also able to re-use the blocks more often, which explained things. Using this new knowledge I was able to construct new versions which not only used less memory (more-or-less on a par with std::queue) but performed better.

Benchmarking in this way is tedious - any good benchmark has to be tested against multiple element types and double-checked on alternative sets of hardware. but when I see people out there making performance assumptions about code, when they haven't actually benchmarked their assumptions, I know this is the right way. You can't guarantee performance if you don't perform.

The most recent v2.0 plf::list update is more of a maintenance release than anything revelatory - though it does fix a significant bug in C++20 sentinel range insert (insert(position, iterator_type1, iterator_type2)), adds sentinel support to assign, overhauls range/initializer_list insert/assign with the same optimisations present in fill-insert, and adds support for move_iterator's to range insert/assign.

The other thing it does is add user-defined sort technique use to sort() - via the PLF_SORT_FUNCTION macro. Ala colony, the user must define that macro to be the name of the sort function they want sort() to use internally, and that function must take the exact same parameters as std::sort(). This means you can use std::stable_sort, and others like gfx::timsort. In addition that macro must be defined before plf_list.h is #included. If it is not defined, std::sort will be the function used. It still uses the same pointer-sort-hack to enable sorting, but for example, stable sorting may be more useful in some circumstances.

Taron M raised the idea of implementing a faster queue, given that std::queue can only run on deque at best, and std::deque's implementation in both libstdc++ and MSVC mean that it effectively becomes a linked list for element types above a certain size. Which is a bit bogus. The result is plf::queue, which is a *lot* faster than std::queue in real-world benchmarking, as in:

* Averaged across total numbers of elements ranging between 10 and 1000000, with the number of samples being 126 and the number of elements increasing by 1.1x each sample. Benchmark in question is a pump test, involving pushing and popping consecutively while adjusting the overall number of elements over time. Full benchmarks are here.

Structurally it's very similar to plf::stack, which made it relatively easy to develop quickly - multiple memory blocks, 2x growth factor, etcetera. We initially spitballed a few interesting ideas to save space in the event of a ring-like scenario, such as re-using space at the start of the first memory block rather than creating a new one; but ultimately the overhead from doing these techniques would've both complicated and killed performance. Also, it has shrink_to_fit(), reserve(), trim() and a few other things, much like plf::stack.

I was investigating a particular trick I'd used for 4-way decision processes in colony, as part of my speech writing process for my talk at CPPnow! 2021. Initially I held my current line, which was that using a switch for this process resulted in better/faster compiler output, both in debug and release builds. This used to be true at one point, however I realised that was a long time ago, and the container had changed since then, and the compilers had changed substantially since then, sooo. Was this still true?

The trick itself involves situations where you have a 3 or 4-way if/then/else block based on the outcome of two boolean decisions, A and B. You bitshift one of the booleans up by one, combine the numbers using logical OR, and put your 3/4 outcomes in 3 switch branches. Initially I did some assembly output analysis based on this simple set of code:

int main()

{

int x = rand() % 5, y = rand() % 2, z = rand() % 3, c = rand() % 4, d = rand() % 5;

const bool a =(rand() % 20) > 5;

const bool b =(rand() & 1) == 1;

if (a && b) d = y * c;

else if (a && !b) c = 2 * d;

else if (!a && b) y = c / z;

else z = c / d * 5;

return x * c * d * y * z;

}vs this code:

int main()

{

int x = rand() % 5, y = rand() % 2, z = rand() % 3, c = rand() % 4, d = rand() % 5;

const bool a =(rand() % 20) > 5;

const bool b =(rand() & 1) == 1;

switch ((a << 1) | b)

{

case 0: z = c / d * 5; break;

case 1: y = c / z; break;

case 2: c = 2 * d; break;

default: d = y * c;

}

return x * c * d * y * z;

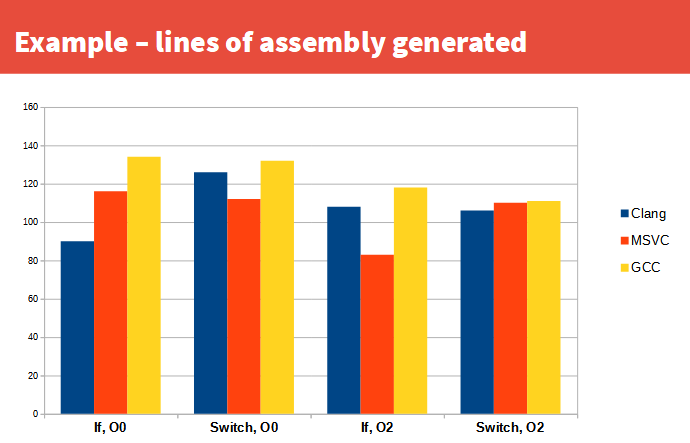

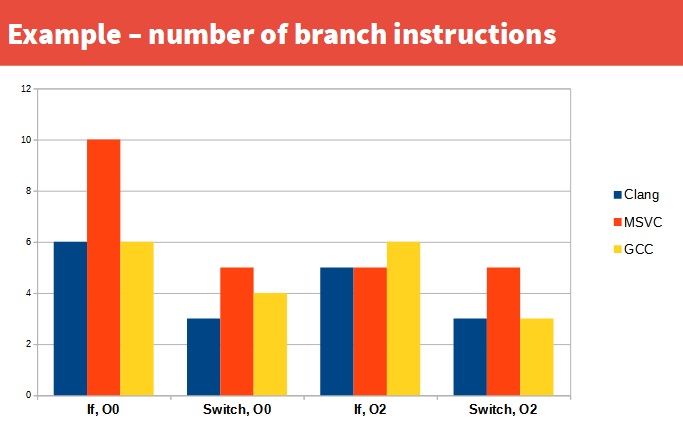

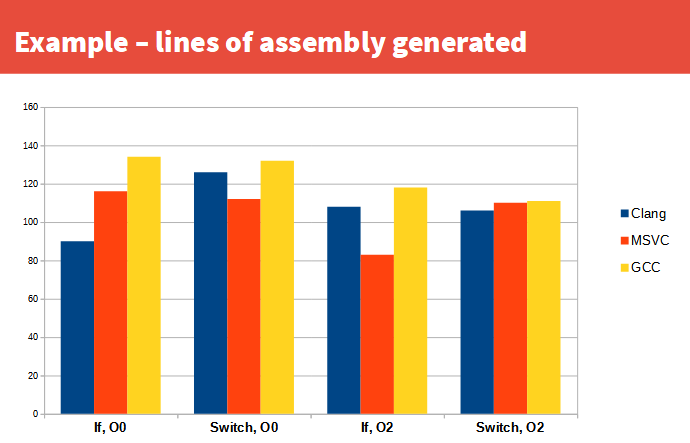

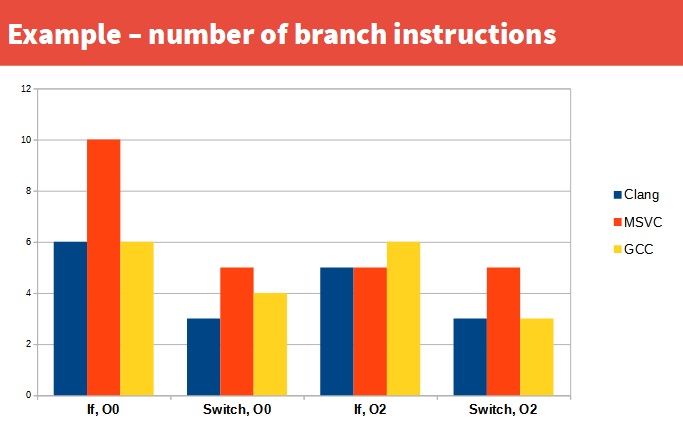

}And, indeed the switch version produced about half the number of branching instructions, at both O0 and O2. However this was inconsistent between compilers (MSVC was the worst, producing 10 (!) branching instructions for the O0 build. How? Also - WWWWhyyyyy???). Here's some graphs from the (now removed) slides:

As you can see there's a significant difference there. All compilers are their most recent non-beta versions at the time of writing. But assembly analysis is not benchmarking. So I proceeded to change all the four-way branches in colony itself to a variety of different configurations - some using switch as above, some using if, some using if but with logical AND instead of && as above. I tested across a wide variety of benchmarks in the benchmark suite, and found that I was wrong. The switch variant was no longer in the fore as far as performance was concerned, though it was for earlier versions of GCC (circa GCC5). In the end I went with a logical AND variant, as it seems to have better performance across the largest number of benchmarks.

So now you know - doing a C++ talk can sometimes result in better code.

Also, I now have a new paper, as promised - on modulated jump-counting patterns. These allow a user, for example, to have 2 or more different types of objects in the array/storage area which the skipfield corresponds to, and conditionally skip-or-process either type of object during iteration. For example, in an array containing red objects and green objects, this allows the dev to iterate over only the red objects, or only the green objects, or both, with O(1) ++ iteration time. It is an extension and modification of jump-counting skipfields and can be used with either the high or low-complexity jump-counting skipfields. I am unsure of what this might be entirely useful for at this point - possibly some kind of very specific multiset.

I have another paper in the pipeline, this time describing a new application of modified jump-counting skipfields, but this paper is something different:

Random access of elements in data containers using multiple memory blocks of increasing capacity

It describes a method which allows the use of increasingly-large memory block capacities, in random-access multiple-memory-block containers such as deques, while retaining O(1) access to any element based upon it's index. It's not useful for colony, but it may be useful for other things. I have a custom container based on this principle, but it's not ready for primetime yet - it hasn't been optimized. Ideally I'd get that out the door before releasing this, but we none of us know when we're going to die, so might as well do it now.

The method requires a little modification for use in a deque - specifically, you would have to expand the capacities in both directions, when inserting to the front or back of the container. But is doable. I came up with the concept while working on plf::list, but have subsequently seen others mention the same concept online in other places. But no-one's actually articulated the specifics of how to do it, which is what this paper intends.

Hopefully is of use.

If I could summarise data-oriented design in a heartbeat, it would be that:

traditional comp sci refocuses on micro perf too much, whereas most of the actual performance changes happen at the macro structure level, and what constitutes optimal macro level structure largely changes from platform to platform so it's better to provide developers with the right tools to fundamentally manage their own core structures and code flow with ease and simplicity, to match those platforms, rather than supplying those structures yourself and pretending the micro-optimisations you're doing on those structures are going to have a major impact on performance.

Making things more complicated in order to obtain minor performance optimisations (like the majority of std:: library additions) obscures and complicates attempts to make the real macro changes. Memorising the amount of increasingly verbose nuance necessary to get the nth-level performance out of the standard library does not contribute necessarily to better code, cleaner code, or more importantly, better-performing code.

I note this with full awareness of the part I have to play in bringing colony to the standard, or attempting to do so. It is better to have this thing than not to have it; that's the core thing. It is an optional macro level structure that can have a fundamental impact on performance if used instead of, say, std::list or vector in particular scenarios. But the amount of bullcrap I've had to go through in terms of understanding all the micro-opt/nuance stuff in C++ like allocators vs allocator_traits, alignment, assign, emplace vs move vs insert etc, has been a major impediment to progress.

Ultimately fate will decide whether this was worth it. I'm erring on the side of 'maybe'. Object orientation isn't going away anytime soon, so it's best that programmers have adequate tools to reach performance milestones without reinventing wheels.

In summary, Keep It Simple + think macro-performance, largely.

[Update - in '22 I realised that the version I had of colony which was purported to be constexpr was in fact not, because constexpr cannot utilize reinterpret_cast - at the time of writing this I did not realise this, and also the bugs and performance issues I noted were compiler bugs which finally disappeared in either late '22 or early '23].

Constexpr-everything hasn't been critically-assessed. There is the potential for cache issues when constexpr functions return large amounts of data, or when functions returning small amounts of data are called many times, for example, and the issue of where that data is stored (stack or heap) and how long it is available for (program lifetime?). There is an awful mess of issues for any respectable compiler to sort through, and I don't envy those developers.